Google is quietly reshaping the web — not with fireworks, but with a few lines of AI just beneath your browser’s surface.

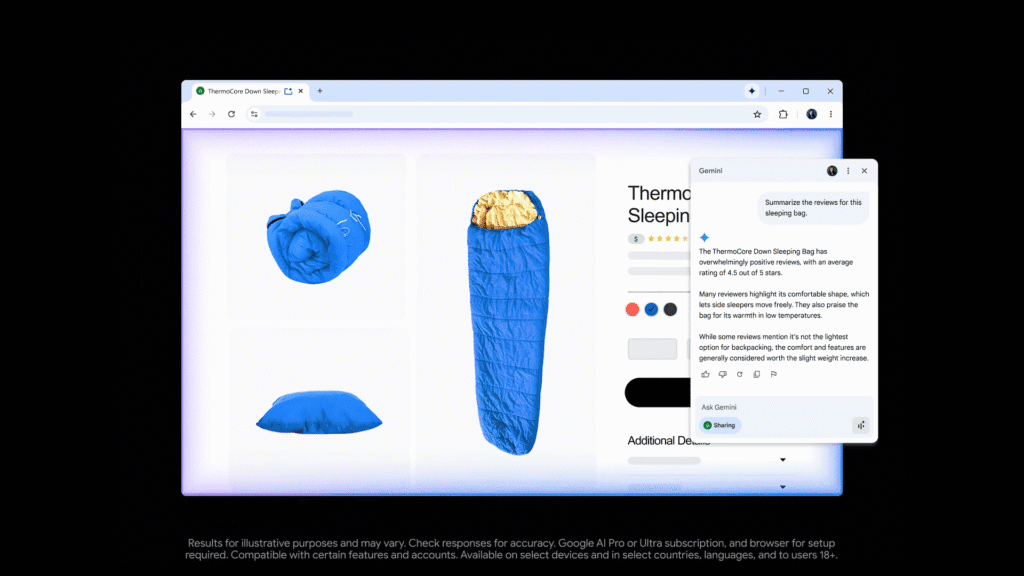

Last week, Google began rolling out Gemini inside Chrome, starting with the side panel assistant feature for U.S. users. If you’re signed in with a personal Google account, typing a query into the sidebar opens a lightweight Gemini interface, letting you ask questions, summarize pages, or rewrite content. It’s the kind of update you might miss — unless you’re watching closely.

But make no mistake: this isn’t just a helpful new tool. It’s a preview of something bigger.

“This is just the beginning of Gemini becoming your agent on the web,” said Google VP Sissie Hsiao during the company’s I/O 2024 keynote.

Gemini’s move into Chrome is subtle — and perhaps deliberately so. After the ambitious but bumpy rollout of Google Assistant, the company appears to be taking a more gradual, integrated approach this time around. Instead of launching a bold, standalone product, Gemini is seeping into the apps we already use daily, especially the browser.

Agents, not just assistants

While Gemini today can help rephrase an email or summarize a long article, the long-term vision is far more autonomous. Google is working toward “agentic” AI — systems that don’t just respond, but act on your behalf, anticipate needs, and string together complex tasks. In other words: not just a chatbot, but a digital co-pilot.

A recent post on Google’s Keyword blog detailed how the assistant will be more deeply integrated with your browsing context, analyzing the site you’re on and suggesting relevant prompts.

This comes on the heels of Google’s developer previews of Project Astra, the company’s new real-time, multimodal agent designed to process sight, sound, and language. The agentic idea is that AI should be able to “see” your environment, understand your goals, and take proactive steps — like scheduling, booking, or even composing content — without explicit, step-by-step instructions.

In a demo video, Google showed off an assistant identifying objects in real time through a phone camera, understanding code on a screen, and offering proactive help.

The slow play

While competitors like OpenAI and Anthropic rush to build full-fledged AI agents, Google seems to be playing a long game. Rather than ask users to switch platforms or habits, it’s embedding Gemini inside familiar interfaces — Chrome, Gmail, Docs, and eventually Android.

For now, the Gemini side panel in Chrome can:

- Answer contextual questions about a webpage

- Offer summaries

- Rewrite or adjust content based on user input

The AI isn’t intrusive; it’s helpful — almost shy. But that’s the point.

This approach reflects Google’s strategy of folding AI into existing products, so users can get used to the new capabilities gradually rather than facing a drastic change in how they work.

What’s next?

In the near future, we can expect deeper ties between Gemini and Google’s broader ecosystem. Imagine Gemini checking your calendar, reading your emails, and helping you plan your week — not just in theory, but automatically.

That future isn’t here just yet, but Gemini in Chrome feels like a soft launch for something much bigger. It’s a small window into a future where the browser isn’t just a portal to the web — it’s your AI-powered agent navigating it with you.

So yes, it may just be a sidebar for now. But it might also be the first real sign that Google’s agentic era has quietly begun.